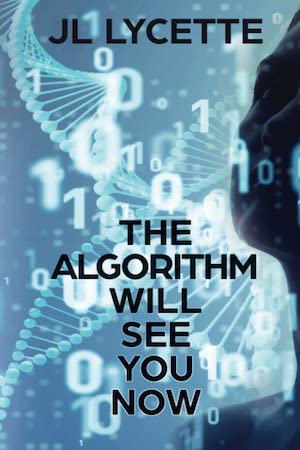

The Algorithm Will See You Now by Jennifer Lycette

Healthcare and artificial intelligence: two topics that seem ever-present in the news cycle. But what are these two seemingly separate matters doing in an exhilarating speculative thriller?

Well, it turns out that Jennifer Lycette’s The Algorithm Will See You Now (Black Rose Writing) isn’t as far-out as we may think. AI is already being used in the medical field, with programmers hoping it will take on some of the burden of our overloaded healthcare system. But as Lycette, herself a practicing physician, points out, employing such cutting-edge technology can come at a great cost.

Q: Where did you get the idea for this book?

A: In my day job, I’m a hematologist/oncologist (a specialist in blood and cancer medicine). During the 2010s, IBM’s Watson (a machine-learning AI) was used in an attempt to better define cancer treatments. But after a few years, the effort fizzled out.

I got the book idea when I read about the mistakes AI tools were making (like Google’s misclassification of photos). After Watson Health’s failure, I wondered: what if we did one day achieve the goal of an advanced medical AI, but it turned out to be fundamentally flawed? Mix that with the increasing corporatization of healthcare, and my story was born. I suppose it’s a classic trope of the science fiction thriller: exploring what fault lies with the technology versus what responsibility lies with humanity.

Q: You roll two hot topics into one: healthcare and the use of artificial intelligence. Briefly, tell us the storyline of your book.

A: Medical treatment determined by artificial intelligence could do more than make Hope Kestrel’s career. It could revolutionize healthcare.

What the Seattle surgeon doesn’t know is that the AI has a hidden flaw, and the people covering it up will stop at nothing to dominate the world’s healthcare and its profits. Soon, Hope is made the scapegoat for a patient’s death, and only Jacie Stone, a gifted intern with a knack for computer science, is willing to help her search for the truth.

But her patient’s death is only the tip of the conspiracy’s iceberg. The power to make life-and-death decisions will fall into the hands of those behind the AI, even if its algorithm accidentally discards some patients who are treatable …

Q: Do you see artificial intelligence as a technology that will spur advances in our society and culture, or as one that, in the wrong hands, could do more harm than good?

A: Sadly, it’s already falling into the wrong hands. In just the past few months, two major stories have exposed how AI is being used in Big Healthcare to put profits over patients.

First was a March 13, 2023, STAT News article, “Denied by A.I.: How Medicare Advantage plans use algorithms to cut off care for seniors in need.” It was an exposé on AI-driven denials of medical care coverage to seniors on Medicare Advantage.

The second was a March 25, 2023, ProPublica article, “How Cigna Saves Millions by Having Its Doctors Reject Claims Without Reading Them.” This investigative report detailed how a major insurance company, Cigna, instructed its employed physicians to sign off on algorithm-determined denials without any human review of the records.

Q: What kind of statement does the book make regarding our healthcare system?

A: Rather than making a statement, I hope it tells a story. From how the AI ultimately affects each character, readers can draw their own conclusions.

But if there is a statement, perhaps it’s that with AI, as with any medical intervention, we must weigh the risk of harm against the chance of benefit.

I’ve been gratified by readers’ responses to the antagonist so far. As others much more intelligent than me have said about writing, the villain thinks they’re the hero of the story. I had to dig deep and think about what experiences might produce a physician like Marah Maddox, with her beliefs and motivations. Readers can then decide whether they buy into her rationale for her actions.

Q: In the book description, this line appears: “The most dangerous lies are the ones that use the truth to sell themselves.” Explain the meaning of that sentence in the context of your book.

A: The story behind that line is that I first wrote it as part of an essay about healthcare grifters who take advantage of people with serious illnesses like cancer to sell them fake cures. (I also write nonfiction essays and medical articles.)

After I wrote the essay, the line stuck with me, and I thought, hey, I could use that in my book, too, because it has similar underlying themes.

I gave the line to a character whom readers first meet as an anonymous podcaster trying to expose the problems with the AI being developed by the fictional Seattle corporation PRIMA (Prognostic Intelligent Medical Algorithms).

Q: Tell us about Hope Kestrel, the Seattle surgeon and protagonist. What makes her tick? Was her character inspired by anyone?

A: From the start, I knew Hope Kestrel would be a doctor who had lost her belief in hope, yet was saddled with the name. She’s 29 and a senior surgical resident at PRIMA.

At eleven, she lost her mom to cancer, and she devoted her career to becoming a “better” kind of doctor, one who wouldn’t offer “false hope.” She embraces PRIMA to achieve that goal.

When she suspects PRIMA might not be everything she believes, Hope has to examine what that means for her career and confront the grief she’s avoided for eighteen years. She has to figure out whether she’s strong enough to finally face it, and how that will change her (or not … no spoilers …).

Q: How did your background and experience help develop this story?

A: As an oncologist of twenty years, I have a keen sense of empathy and observation. I’ve spent half my career in rural practice, where too often patients don’t reach me until it’s too late. Lack of insurance and underinsurance play a prominent role in this.

I became a writer out of a driving need to explore the fundamental question of whether healthcare is a human right. (Spoiler: I believe it is.)

I worry a lot about the power that corporations and insurance companies have over healthcare. In our present system, one serious medical issue can push a family into bankruptcy. I thought a lot about how this current brokenness will motivate the adoption of AI in healthcare, in both good ways and bad.

Q: What do you hope readers take away from this book?

A: I hope readers take away an understanding that healthcare is at a precipice.

It’s a bit wild to me that the book ended up being published in the middle of the current explosion of generative AI (e.g., ChatGPT). (I started writing it in late 2016, before the public had access to “chatbots.”) I think that because of the media’s attention to generative AI, people are quick to see the possibilities. Sure, it’s speculative fiction, but suddenly it doesn’t seem so far-fetched.

I hope people will think about what they want from healthcare. From their doctors. What does it mean to keep the human in healthcare? I also hope they get a glimpse into the pressures on doctors and nurses and how the system can strip away their humanity.